In March 2020, Odoo lists official partners in 132 countries, and supports up to 86 different language codes in v13.

What a huge global success!

However, we'll see in this post that some fine tuning is required to get a full support of non-ascii languages in Odoo.

An example to illustrate the limits of default configuration values

In 2019, the European Union and Vietnam signed a Trade Agreement. As a result, we can expect more European companies to be doing business with Vietnamese companies.

Let's take the example of a French company designing clothes which are then produced in a Vietnamese factory. The French company is using Odoo to manage its operations.

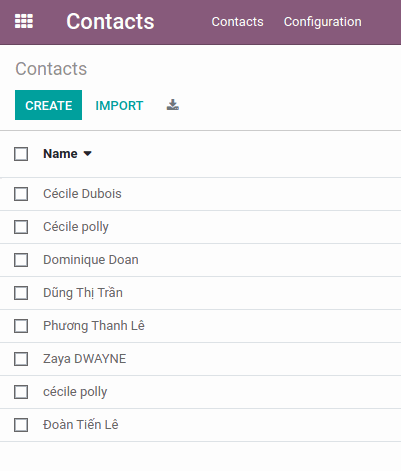

Here is its contacts list:

You might notice a redundant record and inconsistent input conventions, as it is quite common in most companies; but enforcing better practices is not the subject of this post ;-)

Ignore diacritics (accents) in search operations thanks to unaccent

When doing searches amongst records in Odoo, we can't take the assumptions that:

- names have been perfectly inputted, with proper accents

- our memory remembers the exact spelling of names when looking for them

So better make sure that searches operations are accents-insensitive, the same way as they are case-insensitive.

In our case, a search for "Doan" or "Đoàn" should both find partners "Đoàn Tiến Lê" and "Dominique Doan".

unaccent is the PG extension that will help us to achieve this, by removing diacritics (accents) from strings.

How to use it in Odoo?

Odoo comes with native support for unaccent.

Before v14, you needed to explicitly activate it by:

- either adding

unaccent = Trueto your configuration file - or starting odoo with the

--unaccentflag

If extension unaccent is not enabled in the database while its support is activated, Odoo will complain:

2020-03-26 10:54:52,973 41 WARNING v13e odoo.modules.registry: The option --unaccent was given but no unaccent() function was found in database.

Since v14, Odoo will automatically use unaccent if enabled in the database.

Odoo will unaccent all involved strings in queries involving the LIKE/ILIKE operator.

For example:

SELECT "res_partner".id FROM "res_partner" WHERE (("res_partner"."active" = true) AND ((("res_partner"."display_name"::text ilike '%doan%') OR ("res_partner"."ref" = 'doan')) OR ("res_partner"."email"::text ilike '%doan%'))) AND (((("res_partner"."partner_share" IS NULL or "res_partner"."partner_share" = false ) OR ("res_partner"."company_id" in (1))) OR "res_partner"."company_id" IS NULL ) AND ((("res_partner"."type" != 'private') OR "res_partner"."type" IS NULL) OR "res_partner"."type" IS NULL )) ORDER BY "res_partner"."display_name" limit 80will become:

SELECT "res_partner".id FROM "res_partner" WHERE (("res_partner"."active" = true) AND (((unaccent("res_partner"."display_name"::text) ilike unaccent('%doan%')) OR ("res_partner"."ref" = 'doan')) OR (unaccent("res_partner"."email"::text) ilike unaccent('%doan%')))) AND (((("res_partner"."partner_share" IS NULL or "res_partner"."partner_share" = false ) OR ("res_partner"."company_id" in (1))) OR "res_partner"."company_id" IS NULL ) AND ((("res_partner"."type" != 'private') OR "res_partner"."type" IS NULL) OR "res_partner"."type" IS NULL )) ORDER BY "res_partner"."display_name" limit 80How does it work?

unaccent module does not use Unicode normalization, but only a simple search-and-replace dictionary

This dictionary is created from a simple replacements flat file.

In the past, that flat file didn't contain Vietnamese characters. But they've been included in 2017, and are thus supported since PG 11.

How to install it?

The unaccent.rules file is installed in $SHAREDIR/tsearch_data/ (e.g. /usr/share/postgresql/11/tsearch_data/) by the server package (e.g. postgresql-11).

But somehow, the unaccent extension itself is part of the postgresql-contrib package, so make sure to have it installed as well (e.g. postgresql-11-contrib).

Restart Postgresql if you add more characters manually.

How to enable it?

unaccent extension needs to be enabled per database:

psql -d $DB -c "CREATE EXTENSION unaccent;"

To check if it's enabled properly:

psql -d $DB -c "SELECT proname FROM pg_proc WHERE proname='unaccent';"

To check it it's working:

psql -d $DB -c "SELECT unaccent('Đoàn');"

It should output "Doan".

How to disable it?

Simply execute:

psql -d $DB -c "DROP EXTENSION unaccent;"

Make sure that results are sorted in the expected order: collations

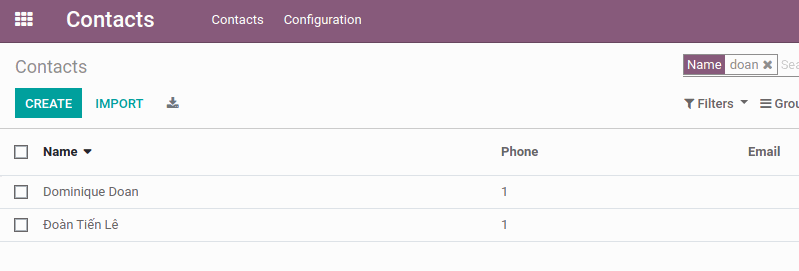

As expected, a search for "doan" now returns "Đoàn Tiến Lê" and "Dominique Doan":

However, we now need to make sure that results are properly ordered, which is not the case.

It is more obvious with the full contact list:

"Đoàn Tiến Lê" appears at the end of the list, which is pretty confusing.

This is because of the collation of the database.

What are collations?

In computer science, a "collation" is a set of rules to sort strings. For example:

- rule 1: numbers should appear before chars

- rule 2: uppercase chars should appear before lowercase chars

The environment variable LC_COLLATE defines which locale's collation should be used.

Let's compare some of them:

$ LC_COLLATE=C sort unsorted_names > sorted_names_C

$ LC_COLLATE=en_US.UTF-8 sort unsorted_names > sorted_names_en_US.UTF-8

$ LC_COLLATE=fr_FR.UTF-8 sort unsorted_names > sorted_names_fr_FR.UTF-8

$ LC_COLLATE=vi_VN.UTF-8 sort unsorted_names > sorted_names_vi_VN.UTF-8

$ unidecode unsorted_names | tr a-z A-Z | sort > sorted_names_no_accent_uppercase

$ cat sorted_names_C

Cécile Dubois

Cécile polly

Dominique Doan

Dũng Thị Trần

Phương Thanh Lê

Zaya DWAYNE

cécile polly

Đoàn Tiến Lê

$ cat sorted_names_en_US.UTF-8

Cécile Dubois

cécile polly

Cécile polly

Đoàn Tiến Lê

Dominique Doan

Dũng Thị Trần

Phương Thanh Lê

Zaya DWAYNE

$ cat sorted_names_fr_FR.UTF-8

Cécile Dubois

Cécile polly

Dominique Doan

Dũng Thị Trần

Phương Thanh Lê

Zaya DWAYNE

cécile polly

Đoàn Tiến Lê

$ cat sorted_names_vi_VN.UTF-8

Cécile Dubois

cécile polly

Cécile polly

Dominique Doan

Dũng Thị Trần

Đoàn Tiến Lê

Phương Thanh Lê

Zaya DWAYNE

$ cat sorted_names_no_accent_uppercase

CECILE DUBOIS

CECILE POLLY

CECILE POLLY

DOAN TIEN LE

DOMINIQUE DOAN

DUNG THI TRAN

PHUONG THANH LE

ZAYA DWAYNEWe can observe that each locale applies slightly different rules:

- C/fr_FR.UTF-8: puts ascii uppercase chars first, which is very confusing when not all names are capitalized properly: "cécile polly" appears at the end of the list

- C/fr_FR.UTF-8: Đ > E, Đoàn appears after Zaya, at the end of the list

- en_US.UTF-8/vi_VN.UTF-8: E > Đ, Đoàn appears before Zaya

- vi_VN.UTF-8: Đ > D, Đoàn appears after Dũng

- (...)

- you can find an exhautive list of concepts here

Regarding the Vietnamese language, here are some more interesting resources:

- traditional collation chart

- modern collation chart: doesn't contain contractions anymore, so "chó" should collate before "có" now

Collations in PG

The collation rules will be applied when the sql query contains an ORDER BY.

PG supports any locale that is available on the host system.

But the LC_COLLATE and LC_CTYPE settings of a database can't be changed after its creation.

Collations in Odoo

Actually, PG allows to change the collation on-the-fly at query level, but Odoo doesn't take advantage of it yet. Tecnativa (an odoo integrator from Spain, known for its strong technical team) suggested that the used collation could be changed dynamically depending on the user's locale, or at least defined on database creation, but Odoo SA R&D team decided it was not worth the effort.

Since v12, Odoo enforces LC_COLLATE=C for databases created via the databases manager, but only when db_template parameter has not been customized.

Impact of collations on performances

This LC_COLLATE=C choice is mostly motivated by performances considerations, as discussed in the PR.

This is a trade-off:

- performances over sort order

- trying to limit disk space used (indexes can be costly)

Odoo SA has a little bias when taking this kind of decision: they operate one of the biggest odoo instance: odoo.com, which is said to have more than 3M+ attachment records.

Attachments are a concern because the ir.attachment table is used by Odoo to store the assets bundles. Here's an example of query (simplified here) that happens when assets have to be regenerated:

SELECT "ir_attachment".id FROM "ir_attachment" WHERE ("ir_attachment"."url"::text like '/web/content/%-%/web.assets_backend%.js') ORDER BY "ir_attachment"."id" DESC;

You can notice the LIKE operator on the url column.

Here's the query plan on a 30k attachments db, created with LC_COLLATE=C:

# EXPLAIN (ANALYZE, VERBOSE, BUFFERS) SELECT "ir_attachment".id FROM "ir_attachment" WHERE ("ir_attachment"."url"::text like '/web/content/%-%/web.assets_backend%.js') ORDER BY "ir_attachment"."id" DESC;

Sort (cost=20.06..20.06 rows=3 width=4) (actual time=0.139..0.140 rows=1 loops=1)

Output: id

Sort Key: ir_attachment.id DESC

Sort Method: quicksort Memory: 25kB

Buffers: shared hit=15

-> Index Scan using ir_attachment_url_index on public.ir_attachment (cost=0.29..20.03 rows=3 width=4) (actual time=0.067..0.085 rows=1 loops=1)

Output: id

Index Cond: (((ir_attachment.url)::text >= '/web/content/'::text) AND ((ir_attachment.url)::text < '/web/content0'::text))

Filter: ((ir_attachment.url)::text ~~ '/web/content/%-%/web.assets_backend%.js'::text)

Rows Removed by Filter: 25

Buffers: shared hit=12

Planning Time: 0.771 ms

Execution Time: 0.194 ms

And now here's the same query plan on the same 30k attachments db, but created with LC_COLLATE=en_US.UTF-8:

# EXPLAIN (ANALYZE, VERBOSE, BUFFERS) SELECT "ir_attachment".id FROM "ir_attachment" WHERE ("ir_attachment"."url"::text like '/web/content/%-%/web.assets_backend%.js') ORDER BY "ir_attachment"."id" DESC;

Sort (cost=1774.89..1774.89 rows=3 width=4) (actual time=15.949..15.950 rows=1 loops=1)

Output: id

Sort Key: ir_attachment.id DESC

Sort Method: quicksort Memory: 25kB

Buffers: shared hit=1305

-> Seq Scan on public.ir_attachment (cost=0.00..1774.86 rows=3 width=4) (actual time=15.922..15.932 rows=1 loops=1)

Output: id

Filter: ((ir_attachment.url)::text ~~ '/web/content/%-%/web.assets_backend%.js'::text)

Rows Removed by Filter: 36836

Buffers: shared hit=1305

Planning Time: 0.273 ms

Execution Time: 15.996 ms

With a LC_COLLATE different then C, the query does not use the expected "ir_attachment_url_index" btree index: it does a sequential scan on the table to find matching rows. This is a known limitation:

if your database does not use the C locale you will need to create the index with a special operator class to support indexing of pattern-matching queries

So the workaround is to create an additional btree index in addition to the default one, but with a different operator then default:

CREATE INDEX ir_attachment_url_index_like ON ir_attachment (url text_pattern_ops);

This index is used indeed:

# EXPLAIN (ANALYZE, VERBOSE, BUFFERS) SELECT "ir_attachment".id FROM "ir_attachment" WHERE ("ir_attachment"."url"::text like '/web/content/%-%/web.assets_backend%.js') ORDER BY "ir_attachment"."id" DESC;

Sort (cost=26.93..26.93 rows=3 width=4) (actual time=0.092..0.092 rows=1 loops=1)

Output: id

Sort Key: ir_attachment.id DESC

Sort Method: quicksort Memory: 25kB

Buffers: shared hit=13 read=2

-> Bitmap Heap Scan on public.ir_attachment (cost=1.62..26.90 rows=3 width=4) (actual time=0.080..0.083 rows=1 loops=1)

Output: id

Filter: ((ir_attachment.url)::text ~~ '/web/content/%-%/web.assets_backend%.js'::text)

Rows Removed by Filter: 25

Heap Blocks: exact=13

Buffers: shared hit=13 read=2

-> Bitmap Index Scan on ir_attachment_url_index_like (cost=0.00..1.62 rows=23 width=0) (actual time=0.035..0.035 rows=26 loops=1)

Index Cond: (((ir_attachment.url)::text ~>=~ '/web/content/'::text) AND ((ir_attachment.url)::text ~<~ '/web/content0'::text))

Buffers: shared read=2

Planning Time: 0.533 ms

Execution Time: 0.132 ms

Note that it will only be used on LIKE/ILIKE queries that start by a fixed prefix, such as /web/content in this case.

To optimize searches that would start by a wildcard, typically keyword(s) searches, we would need to dive into full text search features of PG: this will be for another article ;-)

Let's wrap it up: our final strategy

Use db_template = template1

Odoo uses the db_template config parameter to define the database template from which new databases are created.

Since v12, default value for db_template is template0.

On our side, we use db_template = template1, which can be customized contrarily to template0. This allows us to define the appropriate defaults for new databases.

Get unaccent enabled by default in all databases

We want unaccent support to be enabled by default in all our databases.

So when provisionning our hosts, we enable unaccent in template1: psql -d template1 -c "CREATE EXTENSION unaccent;", after Postgresql server installation.

Note that starting from Odoo v14, unaccent will be enabled automatically by Odoo in new databases when unaccent = True.

But we will keep enabling unaccent in template1, for databases that would be created from the command line (createdb).

Use the most appropriate collation by default

In our case, we expect results to be sorted as if no accent nor case were involved at all, as this is the most intuitive order for our users.

The only collation that produces results matching these expectations is en_US.UTF-8, as seen above.

On Postgresql server installation, initdb creates template1 with the system locale.

So when provisionning our hosts, we configure en_US.UTF-8 as the default locale (/etc/default/locale), before Postgresql server installation.